Dataset#

Table of Introduction in PR#

Sensor Datasets with Feature Extraction#

Sensor datasets, recorded by various sensors detecting environmental changes, are crucial for real-time monitoring, decision-making, predictive analysis, and automation.

Types of Sensors#

- Temperature Sensors: Measure temperature.
- Pressure Sensors: Detect pressure variations.
- Accelerometers and Gyroscopes: Measure acceleration and orientation.
- Proximity and Light Sensors: Detect object presence and light intensity.
- Sound Sensors: Capture audio signals.
- Chemical Sensors: Monitor environmental changes.
- GPS Sensors: Provide location data.

Data is collected not only from temperature sensors but also from other types of sensors that gather information such as text, video, audio, and various environmental parameters.

Data can exist in any form: text, audio, video, and images

Feature Extraction#

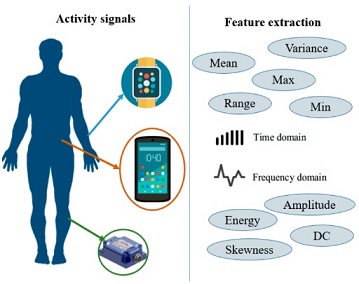

Sensor datasets often contain diverse information collected from various types of sensors. Feature extraction transforms raw sensor data into representative features for analysis, which improves data interpretation and prepares the data for machine learning algorithms. For example, in the following figure, the activity signal introduced in the above section is converted into a feature vector including mean, variance, skewness, and other features.

Activity signal converted into a feature vector (mean, variance, skewness, etc.).
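As a minimal sketch of this idea, the snippet below turns a short window of a signal into a three-element feature vector. The function name `extract_features` and the sample values are illustrative assumptions, not part of the original material, and population variance (`ddof=0`) is an arbitrary choice.

```python
import numpy as np

def extract_features(signal):
    """Summarize a 1-D signal as a small feature vector."""
    x = np.asarray(signal, dtype=float)
    mean = x.mean()
    variance = x.var()  # population variance (ddof=0), an assumed convention
    std = np.sqrt(variance)
    # Skewness: third standardized moment; defined as 0 for a constant signal
    skewness = 0.0 if std == 0 else np.mean(((x - mean) / std) ** 3)
    return np.array([mean, variance, skewness])

# Hypothetical sensor window (made-up values)
window = [1.0, 2.0, 3.0, 4.0, 5.0]
features = extract_features(window)
print(features)
```

In practice a signal would usually be split into fixed-length windows and one such vector computed per window.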

Some Examples of Feature extraction#

Feature Extraction from Text:#

Feature extraction from text involves converting text data into numerical representations that can be used for machine learning models. One common method is using the Term Frequency-Inverse Document Frequency (TF-IDF) approach.

Here’s a simple explanation and Python code to perform TF-IDF feature extraction:

Concepts:#

- Document: A piece of text.
- Corpus: A collection of documents.
- Term Frequency (TF): The frequency of a term 𝑡 in a document 𝑑.
- Inverse Document Frequency (IDF): Measures how important a term is in the entire corpus.

The TF-IDF value increases with the number of times a term appears in a document but is offset by the frequency of the term in the corpus, to adjust for the fact that some words are generally more common than others.

Steps to Compute TF-IDF:

1. Calculate the Term Frequency (TF) for each term in each document.
2. Calculate the Inverse Document Frequency (IDF) for each term.
3. Multiply the TF and IDF values to get the TF-IDF score for each term in each document.

TF-IDF Formula#

TF-IDF stands for Term Frequency-Inverse Document Frequency. It is a numerical statistic intended to reflect how important a word is to a document in a collection or corpus.

Term Frequency (TF)#

The term frequency TF(t,d) is the frequency of term t in document d.

Inverse Document Frequency (IDF)#

The inverse document frequency IDF(t,D) measures how important a term is across the entire corpus D:

$$\mathrm{IDF}(t, D) = \log \frac{N}{\left|\{d \in D : t \in d\}\right|}$$

Where:

N is the total number of documents in the corpus. The denominator is the number of documents in which the term t appears (i.e., the document frequency of the term).

TF-IDF Score#

The TF-IDF score for a term t in a document d is the product of its TF and IDF values:

$$\mathrm{TFIDF}(t, d, D) = \mathrm{TF}(t, d) \cdot \mathrm{IDF}(t, D)$$

This formula adjusts the term frequency of a word by how rarely it appears in the entire corpus, emphasizing words that are more unique to specific documents.
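The three steps can be sketched in plain Python as follows. This is an illustration under stated assumptions: TF is taken as the relative frequency of the term in the document, IDF as log(N / document frequency), and the function name `tf_idf` and the toy corpus are hypothetical.

```python
import math
from collections import Counter

def tf_idf(tokenized_docs):
    """Return one {term: score} dict per document using TF * IDF."""
    n_docs = len(tokenized_docs)
    # Document frequency: number of documents containing each term
    doc_freq = Counter(term for doc in tokenized_docs for term in set(doc))
    scores = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        doc_scores = {}
        for term, count in counts.items():
            tf = count / len(doc)                    # relative frequency (assumed TF variant)
            idf = math.log(n_docs / doc_freq[term])  # IDF = log(N / df)
            doc_scores[term] = tf * idf
        scores.append(doc_scores)
    return scores

# Toy corpus (hypothetical), already lowercased and split into words
corpus = [["love", "god"], ["love", "words"], ["love", "faith"]]
scores = tf_idf(corpus)
print(scores[0])
```

A term that occurs in every document ("love" here) gets a score of zero. Note that scikit-learn's `TfidfVectorizer` by default uses a smoothed IDF and L2-normalizes each row, so its values differ from this unsmoothed version.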

from sklearn.feature_extraction.text import TfidfVectorizer

# Sample documents

documents = [

"If you love God, follow God so that",

"He may also love you and forgive your sins, ",

"for He is Forgiving and Merciful.",

"God has given Him another creation, meaning we have given Him a soul,",

"and 'I have breathed into him of My spirit.'",

"Those who have faith have a greater love for God.",

"I have hastened towards You, my Lord, to seek Your pleasure.",

"Although interpretation in words is clearer,",

"love without words is brighter."

]

# Create the TfidfVectorizer object

vectorizer = TfidfVectorizer()

# Fit and transform the documents to get the TF-IDF matrix

tfidf_matrix = vectorizer.fit_transform(documents)

# Get the feature names (terms)

feature_names = vectorizer.get_feature_names_out()

# Convert the TF-IDF matrix to a dense format and print it

tfidf_dense = tfidf_matrix.todense()

print("TF-IDF Matrix:\n", tfidf_dense)

# Print the feature names

print("\nFeature Names:\n", feature_names)

TF-IDF Matrix:

[[0. 0. 0. 0. 0. 0.

0. 0. 0. 0.37483157 0. 0.

0. 0. 0.55052949 0. 0. 0.

0. 0. 0. 0.37483157 0. 0.

0. 0. 0. 0.24321139 0. 0.

0. 0. 0. 0. 0. 0.

0.37483157 0. 0. 0.37483157 0. 0.

0. 0. 0. 0. 0. 0.27526475

0. ]

[0.37996836 0. 0.27903704 0. 0. 0.

0. 0. 0. 0. 0. 0.37996836

0. 0. 0. 0. 0. 0.

0. 0.32092732 0. 0. 0. 0.

0. 0. 0. 0.24654442 0.37996836 0.

0. 0. 0. 0. 0. 0.37996836

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.27903704

0.32092732]

[0. 0. 0.34597941 0. 0. 0.

0. 0. 0. 0. 0.39791937 0.

0.47112464 0. 0. 0. 0. 0.

0. 0.39791937 0. 0. 0. 0.

0. 0.34597941 0. 0. 0. 0.

0.47112464 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. ]

[0. 0. 0. 0.26905631 0. 0.

0. 0.26905631 0. 0. 0. 0.

0. 0.53811262 0.19758666 0. 0.26905631 0.

0.17457857 0. 0.45449848 0. 0. 0.

0. 0. 0. 0. 0. 0.26905631

0. 0. 0. 0. 0. 0.

0. 0.26905631 0. 0. 0. 0.

0. 0.26905631 0. 0. 0. 0.

0. ]

[0. 0. 0.29057873 0. 0.39568482 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0.25674213 0. 0.3342017 0. 0. 0.

0.39568482 0. 0. 0. 0. 0.

0. 0.3342017 0.39568482 0. 0. 0.

0. 0. 0.39568482 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. ]

[0. 0. 0. 0. 0. 0.

0. 0. 0.36866152 0. 0.31137739 0.

0. 0. 0.27073365 0.36866152 0. 0.

0.47841584 0. 0. 0. 0. 0.

0. 0. 0. 0.23920792 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.36866152 0.

0. 0. 0.36866152 0. 0. 0.

0. ]

[0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.34529878

0.22404889 0. 0. 0. 0. 0.

0. 0. 0.34529878 0. 0. 0.

0. 0.29164485 0. 0.34529878 0.34529878 0.

0. 0. 0. 0. 0. 0.34529878

0.34529878 0. 0. 0. 0. 0.25357678

0.29164485]

[0. 0.43632467 0. 0. 0. 0.

0.43632467 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.43632467 0.43632467

0. 0.32042338 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0.36852676 0.

0. ]

[0. 0. 0. 0. 0. 0.52173373

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0.38314517 0. 0.33852961 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0.

0. 0. 0. 0.52173373 0.44066462 0.

0. ]]

Feature Names:

['also' 'although' 'and' 'another' 'breathed' 'brighter' 'clearer'

'creation' 'faith' 'follow' 'for' 'forgive' 'forgiving' 'given' 'god'

'greater' 'has' 'hastened' 'have' 'he' 'him' 'if' 'in' 'interpretation'

'into' 'is' 'lord' 'love' 'may' 'meaning' 'merciful' 'my' 'of' 'pleasure'

'seek' 'sins' 'so' 'soul' 'spirit' 'that' 'those' 'to' 'towards' 'we'

'who' 'without' 'words' 'you' 'your']

Note that function words such as conjunctions and auxiliary verbs, as well as different inflected forms of the same word, each appear as separate features, for example ‘and’, ‘has’, ‘have’, ‘forgive’, and ‘forgiving’.

Solving this problem#

To keep inflected forms of a word (such as verbs in various tenses) from being treated as separate terms, and to drop function words such as conjunctions, we can use lemmatization and stopword removal. Lemmatization reduces words to their base (dictionary) form, and removing stopwords eliminates common words that are typically not useful for feature extraction.

Here’s a Python code example using nltk and sklearn to perform these preprocessing steps before applying TF-IDF:

from sklearn.feature_extraction.text import TfidfVectorizer

import nltk

from nltk.corpus import stopwords

from nltk.stem import WordNetLemmatizer

from nltk.tokenize import word_tokenize

# Sample documents

documents = [

"If you love God, follow God so that",

"He may also love you and forgive your sins, ",

"for He is Forgiving and Merciful.",

"God has given Him another creation, meaning we have given Him a soul,",

"and 'I have breathed into him of My spirit.'",

"Those who have faith have a greater love for God.",

"I have hastened towards You, my Lord, to seek Your pleasure.",

"Although interpretation in words is clearer,",

"love without words is brighter."

]

# Download NLTK resources (only need to run once)

nltk.download('punkt')

nltk.download('wordnet')

nltk.download('stopwords')

# Initialize the WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

# Get the list of stopwords

stop_words = set(stopwords.words('english'))

def preprocess_text(text):
    # Tokenize the text
    tokens = word_tokenize(text)
    # Lemmatize as verbs (pos='v') and drop stopwords and non-alphanumeric tokens
    processed_tokens = [
        lemmatizer.lemmatize(token.lower(), pos='v')
        for token in tokens
        if token.lower() not in stop_words and token.isalnum()
    ]
    # Join tokens back into a string
    return ' '.join(processed_tokens)

# Preprocess the documents

preprocessed_documents = [preprocess_text(doc) for doc in documents]

# Create the TfidfVectorizer object

vectorizer = TfidfVectorizer()

# Fit and transform the preprocessed documents to get the TF-IDF matrix

tfidf_matrix = vectorizer.fit_transform(preprocessed_documents)

# Get the feature names (terms)

feature_names = vectorizer.get_feature_names_out()

# Convert the TF-IDF matrix to a dense format and print it

tfidf_dense = tfidf_matrix.todense()

# Print the feature names

print("\nFeature Names:\n", feature_names)

Output is:#

Feature Names: [‘also’ ‘although’ ‘another’ ‘breathe’ ‘brighter’ ‘clearer’ ‘creation’ ‘faith’ ‘follow’ ‘forgive’ ‘give’ ‘god’ ‘greater’ ‘hasten’ ‘interpretation’ ‘lord’ ‘love’ ‘may’ ‘mean’ ‘merciful’ ‘pleasure’ ‘seek’ ‘sin’ ‘soul’ ‘spirit’ ‘towards’ ‘without’ ‘word’]

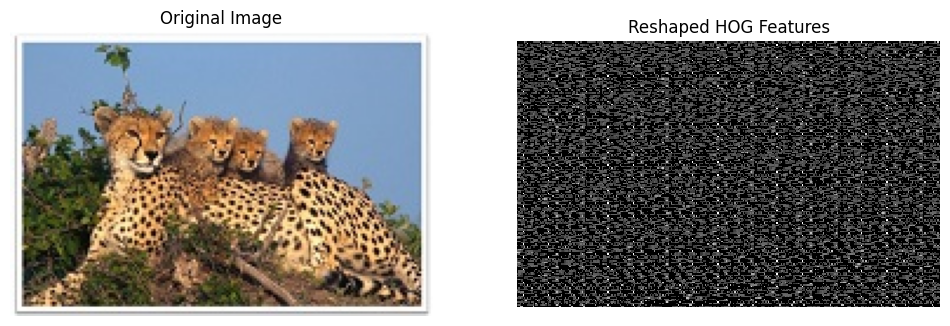

Feature Extraction from Image:#

Feature extraction from images involves transforming raw image data into a set of representative features that can be used for analysis or machine learning tasks. Some of its stages include:

Preprocessing: Before extracting features, it’s often necessary to preprocess the images to standardize them and remove noise. Common preprocessing steps include resizing, cropping, normalization, and noise reduction.

Feature Extraction Techniques: There are various techniques for extracting features from images. Some popular methods include:

Histogram of Oriented Gradients (HOG): HOG computes the distribution of gradient orientations in localized portions of an image. It’s commonly used for object detection and recognition tasks.

Scale-Invariant Feature Transform (SIFT): SIFT detects and describes local features in an image that are invariant to scale and rotation and robust to illumination changes. It’s widely used in image matching and object recognition.

Convolutional Neural Networks (CNNs): CNNs are deep learning models that automatically learn hierarchical features from images. They consist of convolutional layers that extract features at different levels of abstraction.

Feature Representation: Once features are extracted, they need to be represented in a suitable format for analysis or machine learning algorithms. This could involve reshaping them into vectors or matrices.

Application: Extracted features can be used for various tasks such as image classification, object detection, and content-based image retrieval.
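To make the HOG idea concrete, here is a minimal NumPy sketch of its core step: a magnitude-weighted histogram of gradient orientations for a single cell. It is a simplification (no block normalization, no bin interpolation), and the function name `cell_orientation_histogram` and the synthetic cell are assumptions for illustration.

```python
import numpy as np

def cell_orientation_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees)."""
    cell = np.asarray(cell, dtype=float)
    gy, gx = np.gradient(cell)  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    orientation = np.degrees(np.arctan2(gy, gx)) % 180.0
    hist, _ = np.histogram(orientation, bins=n_bins, range=(0.0, 180.0), weights=magnitude)
    return hist

# Synthetic 8x8 cell: brightness increases from left to right
cell = np.tile(np.arange(8.0), (8, 1))
hist = cell_orientation_histogram(cell)
print(hist)
```

For this cell every gradient points along the x-axis, so all of the weight falls into the first orientation bin. A full HOG descriptor concatenates such histograms over many cells after normalizing them within overlapping blocks.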

Python Libraries for Image Feature Extraction

Popular Python libraries for image feature extraction include OpenCV, scikit-image, and TensorFlow.

Here’s a simple example using OpenCV (cv2) to extract HOG features from an image:

import numpy as np

import cv2

import matplotlib.pyplot as plt

# Load the image

image = cv2.imread(r'IntroductionImages/Cheetah.jpg')

# Check if the image was loaded correctly

if image is None:
    raise FileNotFoundError("Image not found. Please check the file path.")

# Convert to grayscale

image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Initialize the HOG descriptor with default parameters

hog = cv2.HOGDescriptor()

# Compute the HOG features

hog_features = hog.compute(image_gray)

# Visualizing the HOG features
# Note: hog_features is a flat vector of block histograms, not a pixel grid.
# Reshaping it below gives only a rough picture, not a spatially faithful HOG visualization.

height, width=np.shape(image_gray)

aspect_ratio = height / width

hog_size=np.size(hog_features)

# Compute new height and width that will fit the HOG size, while keeping aspect ratio

def find_valid_dimensions(hog_size, aspect_ratio):
    for h in range(1, hog_size + 1):
        if hog_size % h == 0:  # h must be a divisor of hog_size
            w = hog_size // h
            # Check if the aspect ratio is approximately maintained
            if np.isclose(h / w, aspect_ratio, rtol=0.1):  # Allow some tolerance
                return h, w
    raise ValueError("Could not find valid dimensions for reshaping HOG features.")

# Calculate new dimensions

new_height, new_width = find_valid_dimensions(hog_size, aspect_ratio)

# Reshape the HOG feature vector

hog_image = np.reshape(hog_features, (new_height, new_width))

hog_image = np.where(hog_image < 0.2 * np.max(hog_image), 0, hog_image)

hog_image = hog_image / np.max(hog_image) if np.max(hog_image) != 0 else hog_image

fig, axes = plt.subplots(1, 2, figsize=(12, 6))

# Display original image

axes[0].imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB)) # Convert BGR to RGB

axes[0].set_title('Original Image')

axes[0].axis('off')

# Display HOG features

#axes[1].imshow(hog_image, cmap='gray')

axes[1].imshow(hog_image, cmap='gray', interpolation='nearest', vmin=0, vmax=np.max(hog_image))

axes[1].set_title("Reshaped HOG Features")

axes[1].axis('off')

plt.show()

print(f"HOG Features shape: {hog_features.shape}")

HOG Features shape: (56700,)

Known issue in the code above: reshaping the HOG features#

This code loads an example image, converts it to grayscale, and extracts HOG features. It then displays the original image alongside the HOG features.
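The reported length of 56700 can be understood with a little arithmetic. The sketch below assumes the usual defaults of `cv2.HOGDescriptor()` (64×128 window, 16×16 blocks, 8×8 block stride, 8×8 cells, 9 bins); the helper `hog_descriptor_length` is hypothetical and only counts values.

```python
def hog_descriptor_length(win=(64, 128), block=(16, 16), stride=(8, 8), cell=(8, 8), nbins=9):
    """Number of values in one HOG window descriptor (sizes given as (width, height))."""
    blocks_x = (win[0] - block[0]) // stride[0] + 1
    blocks_y = (win[1] - block[1]) // stride[1] + 1
    cells_per_block = (block[0] // cell[0]) * (block[1] // cell[1])
    return blocks_x * blocks_y * cells_per_block * nbins

per_window = hog_descriptor_length()
n_windows = 56700 // per_window
print(per_window, n_windows)
```

Under these assumptions one window yields 3780 values, so 56700 values would be consistent with the descriptor having been computed at 15 window positions. The vector is therefore a concatenation of block histograms from several windows rather than a pixel grid, which is why reshaping it to the image's aspect ratio does not produce a meaningful picture.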

Another feature that can be extracted from the image is the histogram. The normalized histogram provides a probability distribution of pixel intensities in the grayscale image, illustrating how frequently each intensity value occurs throughout the image. The x-axis represents the pixel intensity values, ranging from 0 (black) to 1 (white), while the y-axis shows the normalized frequency of each intensity value. To obtain a histogram of the image, the following code can be added:

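import numpy as np

# image_gray from cv2 is uint8 in [0, 255]; rescale to [0, 1] to match range=(0, 1)
hist, bins = np.histogram(image_gray / 255.0, bins=256, range=(0, 1))

# Normalize the histogram so that it sums to 1
hist_normalized = hist / hist.sum()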

The following figure shows the normalized histogram for the image ‘cheetah.jpg’.

Interpreting the histogram can provide insights into the image’s composition. Peaks in the histogram correspond to intensity values that occur frequently. In an image with a distinct target (like the cheetah) and background, the histogram might show two or more peaks. One peak could represent the intensity values of the target (cheetah), while another could represent the background (e.g., grass, sky). By analyzing these peaks, we can distinguish between different regions of the image.
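The two-peak situation can be illustrated on a synthetic image. The values 0.2 and 0.8 below are made up to stand in for background and target; picking the two most populated bins is a deliberately naive peak finder, not a general method.

```python
import numpy as np

# Synthetic grayscale image in [0, 1]: dark "background" (0.2) and bright "target" (0.8)
image = np.full((10, 10), 0.2)
image[3:7, 3:7] = 0.8

hist, bin_edges = np.histogram(image, bins=256, range=(0, 1))
hist_normalized = hist / hist.sum()

# Indices of the two most populated bins, in ascending intensity order
peak_bins = np.sort(np.argsort(hist_normalized)[-2:])
peak_intensities = bin_edges[peak_bins]  # left edge of each peak bin
print(peak_bins, peak_intensities)
```

A threshold placed between the two peaks would then separate the bright region from the dark one. Real images have broader, noisier peaks, so a smoothing step or a dedicated thresholding method is usually needed.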

We can add code to detect corners in an image using the Harris Corner Detection method from the OpenCV library. This feature extraction technique identifies points in the image where the intensity changes significantly in multiple directions, which typically corresponds to corners. The main addition to the code is:

import cv2
import numpy as np

# Detect corners using Harris Corner Detection
# image_gray from cv2 is uint8; cornerHarris expects a single-channel uint8 or float32 image
corners = cv2.cornerHarris(np.float32(image_gray), blockSize=2, ksize=3, k=0.04)
corners_dilated = cv2.dilate(corners, None)  # Dilate to make the corner marks more visible
image_with_corners = np.copy(image)
# Mark corners in red (OpenCV stores channels in BGR order)
image_with_corners[corners_dilated > 0.01 * corners_dilated.max()] = [0, 0, 255]
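To show what the Harris response measures, here is a simplified NumPy sketch. It is not OpenCV's implementation (which uses Sobel derivatives and a configurable block size): it assumes central-difference gradients and a fixed 3×3 summation window, and the helper names are hypothetical.

```python
import numpy as np

def window_sum_3x3(a):
    """Sum of each pixel's 3x3 neighborhood (zero padding at the border)."""
    p = np.pad(a, 1)
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3))

def harris_response(gray, k=0.04):
    """Harris response R = det(M) - k * trace(M)^2 at every pixel."""
    gy, gx = np.gradient(np.asarray(gray, dtype=float))
    sxx = window_sum_3x3(gx * gx)
    syy = window_sum_3x3(gy * gy)
    sxy = window_sum_3x3(gx * gy)
    return sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2

# Synthetic image: a bright square on a dark background
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
response = harris_response(img)
print(response[5, 5], response[5, 10], response[0, 0])
```

The response is positive at a corner of the square, negative along a straight edge, and zero in flat regions, which is why thresholding it at a fraction of its maximum picks out corners.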