Gaussian Mixture Model#

To estimate the parameters of a Gaussian Mixture Model (GMM) using maximum likelihood over a given dataset \(\{x_i; i = 1, \ldots, n\}\), you can follow these steps:

Model Definition:#

The GMM can be defined as:

where:

\(\alpha_j\) are the mixing coefficients, which must satisfy \(0 \leq \alpha_j \leq 1\) and \(\sum_{j=1}^{M} \alpha_j = 1\).

\(\mathcal{N}(x; \mu_j, \sigma_j)\) is the normal (Gaussian) distribution with mean \(\mu_j\) and standard deviation \(\sigma_j\).

Maximum Likelihood Estimation:#

The likelihood of the data given the parameters is:

The log-likelihood is:

we maximize the expected log-likelihood with respect to the parameters \(\alpha_j\), \(\mu_j\), and \(\sigma_j\).

Solving for \(\alpha_j\)#

Given that (\sum_{j=1}^{M} \alpha_j = 1) and (\sum_{j=1}^{M} \sum_{i=1}^{n} \gamma_{ij} = n), we get:

Thus:

Substituting (\lambda) back into the equation for (\alpha_j):

Final Form#

Therefore, the update for the mixing coefficients (\alpha_j) is given by:

This completes the derivation of the mixing coefficients (\alpha_j) in the context of the EM algorithm for GMMs.

Derivative with Respect to Mixing Coefficients \(\alpha_j\)#

The expected log-likelihood with respect to \(\alpha_j\) is:

To find the update for \(\alpha_j\), we take the derivative and set it to zero. First, we focus on the term inside the log function:

Using the chain rule, the derivative of the log term with respect to (\alpha_j) is:

Define :

Summing over all data points (i):

Next, we consider the derivative of the constraint term:

Combining the derivatives from the log-likelihood term and the constraint term, we get:

Summing over all (j):

Substitute to \( \sum_{i=1}^{n} \gamma_{ij} = \alpha_j\lambda \)

Thus, the update for \(\alpha_j\) is:

Derivative with Respect to Means \(\mu_j\)#

The expected log-likelihood with respect to \(\mu_j\) is:

Using it,

Solving for \(\mu_j\) gives:

Derivative with Respect to Standard Deviations \(\sigma_j\)#

Taking the derivative with respect to \(\sigma_j\):

Solving for \(\sigma_j\) gives:

Expectation-Maximization (EM) Algorithm:#

The EM algorithm is typically used to find the maximum likelihood estimates of the parameters.

Initialization: Initialize the parameters \(\alpha_j\), \(\mu_j\), and \(\sigma_j\).

Expectation Step (E-Step): Compute the posterior probabilities (responsibilities) for each data point \(x_i\) belonging to each component \(j\): $\( \gamma_{ij} = \frac{\alpha_j \mathcal{N}(x_i; \mu_j, \sigma_j)}{\sum_{k=1}^{M} \alpha_k \mathcal{N}(x_i; \mu_k, \sigma_k)} \)$

Maximization Step (M-Step): Update the parameters using the responsibilities:

Iteration:#

Repeat the E-step and M-step until convergence, i.e., until the change in the log-likelihood is below a certain threshold.

Example Code Using Python and Scikit-learn#

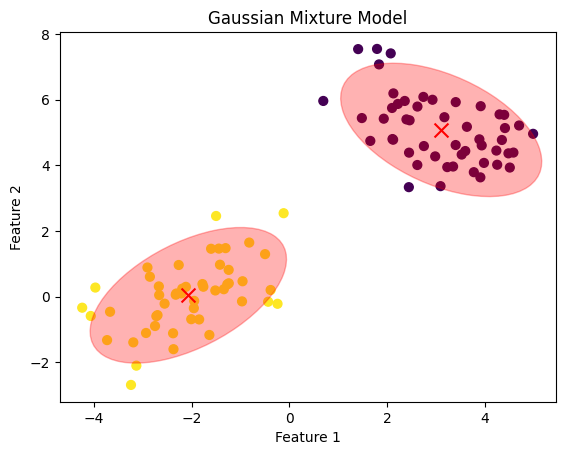

Synthetic Data

import numpy as np

from sklearn.mixture import GaussianMixture

import matplotlib.pyplot as plt

# Generate sample data with 2-dimensional features

np.random.seed(0)

n_samples = 100

# Generate data from two different 2D Gaussian distributions

mean1 = [-2, 0]

cov1 = [[1, 0.5], [0.5, 1]]

X1 = np.random.multivariate_normal(mean1, cov1, n_samples // 2)

mean2 = [3, 5]

cov2 = [[1, -0.5], [-0.5, 1]]

X2 = np.random.multivariate_normal(mean2, cov2, n_samples // 2)

# Combine the datasets

X = np.vstack((X1, X2))

# Fit a Gaussian Mixture Model with 2 components

gmm = GaussianMixture(n_components=2, random_state=0)

gmm.fit(X)

# Predict the component assignments

labels = gmm.predict(X)

# Plot the data and the GMM components

plt.scatter(X[:, 0], X[:, 1], c=labels, s=40, cmap='viridis', marker='o')

plt.scatter(gmm.means_[:, 0], gmm.means_[:, 1], c='red', s=100, marker='x')

plt.title('Gaussian Mixture Model')

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

# Plot the covariances as ellipses

from matplotlib.patches import Ellipse

def plot_cov_ellipse(cov, pos, nstd=2, **kwargs):

"""

Plots an ellipse representing the covariance matrix cov centered at pos

"""

eigvals, eigvecs = np.linalg.eigh(cov)

order = eigvals.argsort()[::-1]

eigvals, eigvecs = eigvals[order], eigvecs[:, order]

theta = np.degrees(np.arctan2(*eigvecs[:, 0][::-1]))

width, height = 2 * nstd * np.sqrt(eigvals)

ellip = Ellipse(xy=pos, width=width, height=height, angle=theta, **kwargs)

return ellip

ax = plt.gca()

for i in range(2):

cov = gmm.covariances_[i]

mean = gmm.means_[i]

ellip = plot_cov_ellipse(cov, mean, nstd=2, alpha=0.3, color='red')

ax.add_artist(ellip)

plt.show()

Example : Applying GMM to an Image#

import numpy as np

import matplotlib.pyplot as plt

from sklearn.mixture import GaussianMixture

import cv2

#from skimage import io

# Step 1: Load the image

image = cv2.imread(r'./BayesEstimationImages/cat.jpg')

# Check if the image was loaded

if image is None:

raise FileNotFoundError("Image not found. Please check the file path.")

#image = io.imread('E:/HadiSadoghiYazdi/SadoghiSite/courses/PR/BayesEstimation/BayesEstimationImages/cat.jpg') # Replace with your image path

#image = cv2.imread('E:/HadiSadoghiYazdi/SadoghiSite/courses/PR/BayesEstimation/BayesEstimationImages/cat.jpg')

#image_rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Step 2: Preprocess the image data

# Reshape the image to a 2D array of shape (num_pixels, 3)

pixels = image.reshape(-1, 3)

# Step 3: Fit the GMM

n_components = 4 # Number of segments (clusters)

gmm = GaussianMixture(n_components=n_components, random_state=0)

gmm.fit(pixels)

# Predict the cluster labels for each pixel

labels = gmm.predict(pixels)

# Step 4: Reshape the labels to the original image dimensions

segmented_image = labels.reshape(image.shape[:2])

# Step 5: Visualize the segmented image

# Create an RGB image where each cluster is represented by a unique color

colored_segments = np.zeros_like(image)

for i in range(n_components):

colored_segments[segmented_image == i] = np.random.randint(0, 255, size=3)

# Display the original and segmented images

fig, ax = plt.subplots(1, 2, figsize=(12, 6))

ax[0].imshow(image)

ax[0].set_title('Original Image')

ax[0].axis('off')

ax[1].imshow(colored_segments)

ax[1].set_title('Segmented Image')

ax[1].axis('off')

plt.show()

HomeWork: After gaining the following experience, explain the routine and the changes required to capture suitable digit generation for each number.#

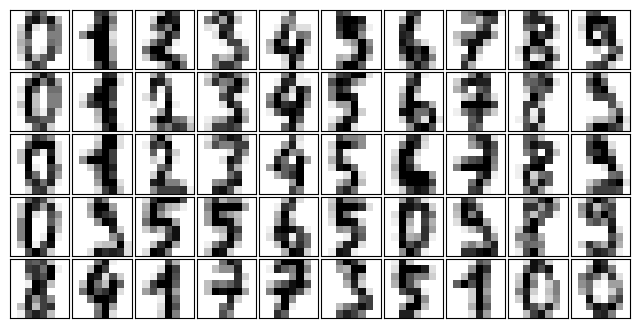

GMMs for Generating New Data#

We just saw a simple example of using a GMM as a generative model in order to create new samples from the distribution defined by the input data. Here we will run with this idea and generate new handwritten digits from the standard digits corpus that we have used before.

To start with, let’s load the digits data using Scikit-Learn’s data tools:

import matplotlib.pyplot as plt

from sklearn.datasets import load_digits

digits = load_digits()

def plot_digits(data):

fig, ax = plt.subplots(5, 10, figsize=(8, 4),

subplot_kw=dict(xticks=[], yticks=[]))

fig.subplots_adjust(hspace=0.05, wspace=0.05)

for i, axi in enumerate(ax.flat):

im = axi.imshow(data[i].reshape(8, 8), cmap='binary')

im.set_clim(0, 16)

plot_digits(digits.data)

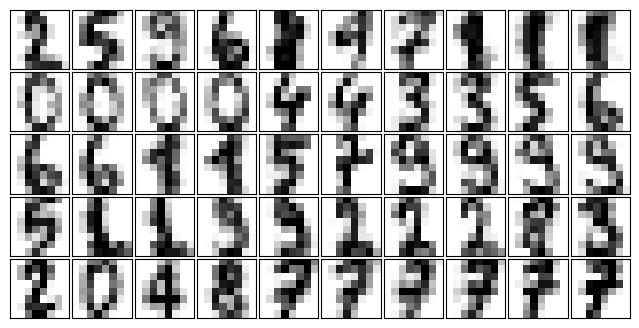

We have nearly 1,800 digits in 64 dimensions, and we can build a GMM on top of these to generate more. GMMs can have difficulty converging in such a high-dimensional space, so we will start with an invertible dimensionality reduction algorithm on the data. Here we will use a straightforward PCA, asking it to preserve 99% of the variance in the projected data:

from sklearn.decomposition import PCA

from sklearn.mixture import GaussianMixture

pca = PCA(0.99, whiten=True)

data = pca.fit_transform(digits.data)

gmm = GaussianMixture(100, covariance_type='full', random_state=0)

gmm.fit(digits.data)

#GMM as a generative model

data_new, label_new = gmm.sample(100)

#digits_new = pca.inverse_transform(data_new)

#plot_digits(digits_new)

plot_digits(data_new)

HomeWork End