Kernel Regression#

Your introduction to kernel regression provides a clear overview of the method, its applications, and the theoretical framework. Below is a refined version of the text, maintaining the key details and improving clarity.

Introduction#

Kernel regression is a widely employed technique in various fields, including image denoising and reconstruction, telecommunications, and prediction and decision-making in noisy environments. This non-parametric method is used to estimate complex non-linear relationships between explanatory and response variables. Given a set of independent and identically distributed (i.i.d) data points \(\{(X_i, Y_i)\}_{i=1}^n\), a regression model is defined as:

Here, \(\epsilon\) represents a zero-mean error term with variance \(\sigma^2\), while \(m(\cdot)\) denotes an unknown smooth nonlinear function.

Problem formulation#

The challenge of determining the \(m(\cdot)\) function, which effectively models the relationship between data points without making assumptions, has been addressed through various methods. The regression function \(m\) is estimated using the conditional expectation of \(y\) given \(x\):

In non-parametric problems, where the joint and marginal density functions are entirely unknown, kernel density estimation (Parzen estimator) is employed to approximate these functions:

where \(h\) indicates the bandwidth, and \(K\) is a kernel function that satisfies:

The \(m(\cdot)\) function is then achieved through a local weighted average, commonly known as the Nadaraya-Watson estimator:

If \( h_i \) goes to small values we have,

Again with more details Non-parametric regression defines the relationship between response \(Y\) and explanatory \(X\) variables \(\{(X_i, Y_i)\}_{i=1}^n\) by an unknown \(m(\cdot)\) function as in Eq. (1). Using the expected loss viewpoint in the kernel regression problem leads to the cost function of what actually occurred \(y\) and what the model predicted \(m(\cdot)\):

where \(L\) indicates the loss function. Taking the derivative of Eq. (5) with respect to \(m(\cdot)\) gives the best estimator:

Employing the square loss in Eq. (6) and taking the derivative with respect to \(m(\cdot)\) results in the unknown \(m(x)\) function as follows:

which is the ordinary Nadaraya-Watson estimator.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Kernel function: Gaussian kernel

def gaussian_kernel(x, X, bandwidth):

return np.exp(-0.5 * ((x - X) / bandwidth) ** 2)

# Nadaraya-Watson estimator

def nadaraya_watson(x, X_train, y_train, bandwidth):

weights = gaussian_kernel(x, X_train, bandwidth)

return np.sum(weights * y_train) / np.sum(weights)

# Generate synthetic data

np.random.seed(0)

x = np.linspace(-3, 3, 100)

y = 2 + 3 * x + 4 * x**2 + np.random.normal(0, 2, x.shape)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

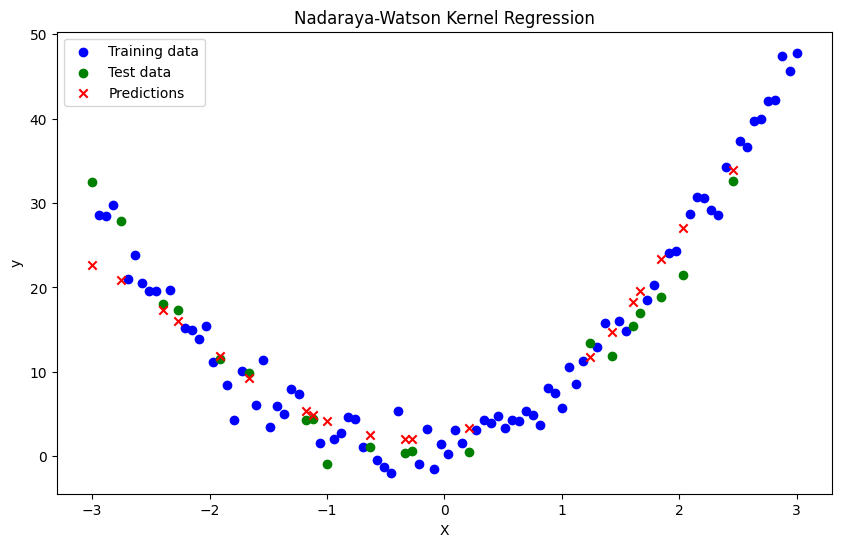

# Apply Nadaraya-Watson estimator

bandwidth = 0.5

y_pred = np.array([nadaraya_watson(xi, X_train, y_train, bandwidth) for xi in X_test])

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f"Mean Squared Error: {mse}")

# Plot the results

plt.figure(figsize=(10, 6))

plt.scatter(X_train, y_train, label='Training data', color='blue')

plt.scatter(X_test, y_test, label='Test data', color='green')

plt.scatter(X_test, y_pred, label='Predictions', color='red', marker='x')

plt.title('Nadaraya-Watson Kernel Regression')

plt.xlabel('X')

plt.ylabel('y')

plt.legend()

plt.show()

Mean Squared Error: 13.536508293401408

Kernel Regression by correntropy loss#

Derive the closed form of kernel regression using the Correntropy-Induced Metric (CIM) as follows,

Define the Correntropy Loss Function#

Correntropy is a similarity measure between two random variables that is robust to non-Gaussian noise and outliers. The loss function based on correntropy is:

For the kernel regression problem, we consider the expected loss:

Write the Expected Loss in Integral Form#

Expressing the expected loss in terms of an integral:

Take the Derivative with Respect to \( m(x) \)#

To find the optimal function \( m(x) \), we need to take the derivative of \( V_{\text{CIM}} \) with respect to \( m(x) \) and set it to zero:

The derivative inside the integral is:

Thus, the integral becomes:

Simplify the Integral#

Rewriting the equation in terms of the joint density function \( f_{X,Y}(x, y) \):

Discrete Approximation with Kernel Density#

In practice, we approximate the continuous distribution using kernel density estimation. Let \( K_h \) be the kernel function with bandwidth \( h \), and we have a sample \(\{(X_i, Y_i)\}_{i=1}^n\). The estimator for \( f_{X,Y}(x, y) \) is:

Substituting this into the integral:

Evaluate the Integral#

The Dirac delta function \( \delta(y - Y_i) \) picks out the value at \( y = Y_i \):

Solve for \( m(x) \)#

To solve for \( m(x) \), we need to isolate \( m(x) \). This involves solving a nonlinear equation. One approach is to use an iterative method such as fixed-point iteration or gradient descent. However, for simplicity, let’s rewrite the expression in a more familiar form:

Rearranging terms, we get:

Thus, the closed form of the estimator \( \hat{m}(x) \) can be written as:

Iterative Solution#

Since \( m(x) \) appears on both sides of the equation, we solve it iteratively:

. Initialize \( m(x) \) (e.g., using the Nadaraya-Watson estimator). . Update \( m(x) \) using the equation above until convergence.

Finally

The kernel regression estimator with correntropy loss is given by:

This form requires an iterative solution to find \( m(x) \) because it is implicitly defined.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Kernel functions: Gaussian kernels

def gaussian_kernel(x, X, bandwidth):

return np.exp(-0.5 * ((x - X) / bandwidth) ** 2)

def gaussian_correntropy(u, sigma):

return np.exp(-0.5 * (u / sigma) ** 2)

# Correntropy-induced Kernel Regression

def correntropy_kernel_regression(x, X_train, y_train, bandwidth, sigma, tol=1e-5, max_iter=100):

weights = gaussian_kernel(x, X_train, bandwidth)

m_x = np.mean(y_train) # Initialize m(x) with the mean of y_train

for _ in range(max_iter):

m_x_new = np.sum(y_train * np.exp(-0.5 * ((y_train - m_x) / sigma) ** 2) * weights) / np.sum(np.exp(-0.5 * ((y_train - m_x) / sigma) ** 2) * weights)

# Check for convergence

if np.abs(m_x_new - m_x) < tol:

break

m_x = m_x_new

return m_x

# Generate synthetic data

np.random.seed(0)

x = np.linspace(-3, 3, 100)

y = 2 + 3 * x + 4 * x**2 + np.random.normal(0, 2, x.shape)

# Introduce outliers

y[::10] += 120 * (np.random.rand(10) - 0.5)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

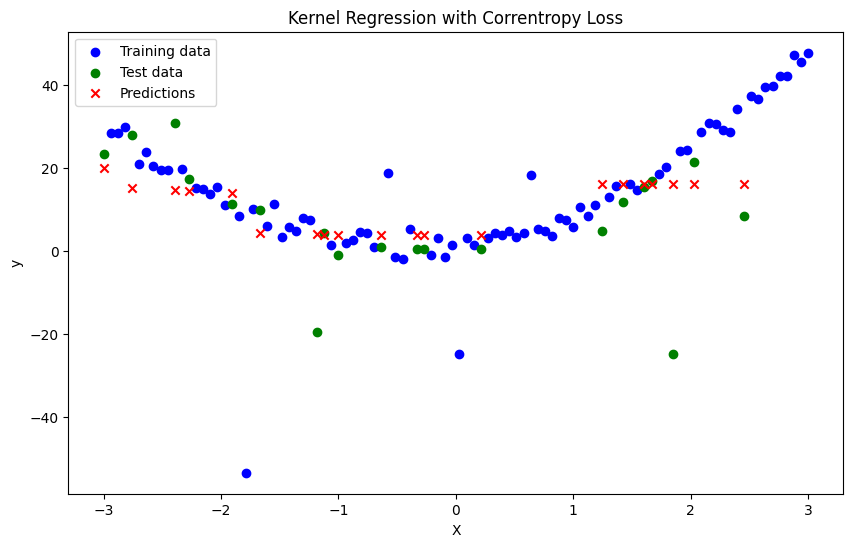

# Apply Correntropy-induced Kernel Regression

bandwidth = 1.1

sigma = 2.2

y_pred = np.array([correntropy_kernel_regression(xi, X_train, y_train, bandwidth, sigma) for xi in X_test])

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f"Mean Squared Error: {mse}")

# Plot the results

plt.figure(figsize=(10, 6))

plt.scatter(X_train, y_train, label='Training data', color='blue')

plt.scatter(X_test, y_test, label='Test data', color='green')

plt.scatter(X_test, y_pred, label='Predictions', color='red', marker='x')

plt.title('Kernel Regression with Correntropy Loss')

plt.xlabel('X')

plt.ylabel('y')

plt.legend()

plt.show()

Mean Squared Error: 150.4070291748456

Homework 1#

Correct the above code and write the perfect mathematics and code for log_cosh_loss, quantile_loss, and tukey_loss.

Weighted Sum of Squared Errors#

We used kernel regression idea in local linear regression, instead of fitting a constant function \(m(x)\), we fit a linear function \(m(x) \approx a + b(x - x_i)\) around each point \(x\). The goal is to estimate the parameters \(a\) and \(b\) by minimizing a weighted sum of squared errors.

The objective function to minimize is:

where \(K_h\) is a kernel function with bandwidth \(h\). The Gaussian kernel is commonly used:

Matrix Formulation#

To solve this, we can set up the problem in matrix form. Define:

\( \mathbf{y} \) as the vector of observed responses.

\( \mathbf{X} \) as the design matrix of predictors.

For local linear regression, we augment the design matrix to include the linear term:

Define the weight matrix \( \mathbf{W} \) as a diagonal matrix with the kernel weights:

The parameters \( \mathbf{\theta} \) (which include \( a \) and \( b \)) are estimated by minimizing:

Solution#

The solution to the weighted least squares problem is given by:

This yields the estimates for \( a \) and \( b \).

Local Linear Estimate#

The local linear estimate of \( m(x) \) at a point \( x \) is:

where \( a \) is the first component of \( \mathbf{\theta} \).

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Kernel function: Gaussian kernel

def gaussian_kernel(x, X, bandwidth):

return np.exp(-0.5 * ((x - X) / bandwidth) ** 2)

# Local Linear Regression

def local_linear_regression(x, X_train, y_train, bandwidth):

weights = gaussian_kernel(x, X_train, bandwidth)

W = np.diag(weights)

X_augmented = np.vstack([np.ones_like(X_train), X_train - x]).T

theta = np.linalg.inv(X_augmented.T @ W @ X_augmented) @ X_augmented.T @ W @ y_train

return theta[0]

# Generate synthetic data

np.random.seed(0)

x = np.linspace(-3, 3, 100)

y = 2 + 3 * x + 4 * x**2 + np.random.normal(0, 2, x.shape)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

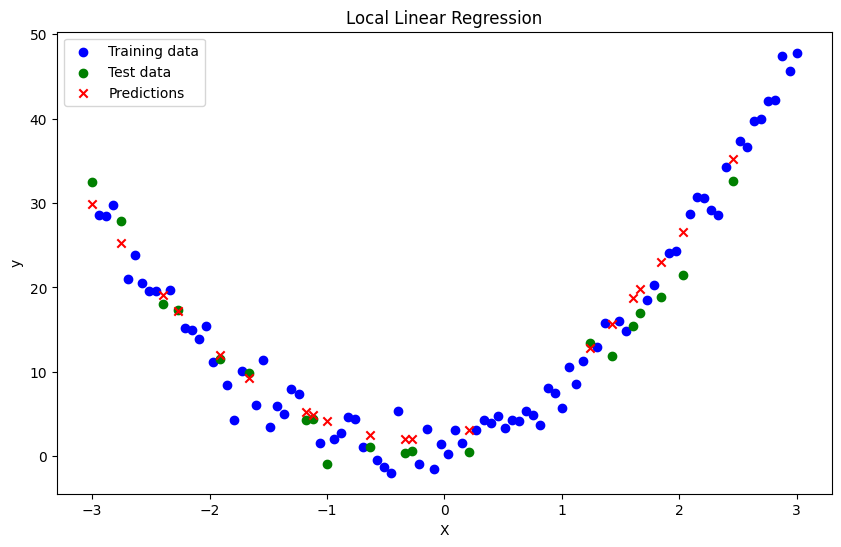

# Apply Local Linear Regression

bandwidth = 0.5

y_pred = np.array([local_linear_regression(xi, X_train, y_train, bandwidth) for xi in X_test])

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f"Mean Squared Error: {mse}")

# Plot the results

plt.figure(figsize=(10, 6))

plt.scatter(X_train, y_train, label='Training data', color='blue')

plt.scatter(X_test, y_test, label='Test data', color='green')

plt.scatter(X_test, y_pred, label='Predictions', color='red', marker='x')

plt.title('Local Linear Regression')

plt.xlabel('X')

plt.ylabel('y')

plt.legend()

plt.show()

Mean Squared Error: 6.924919926410908

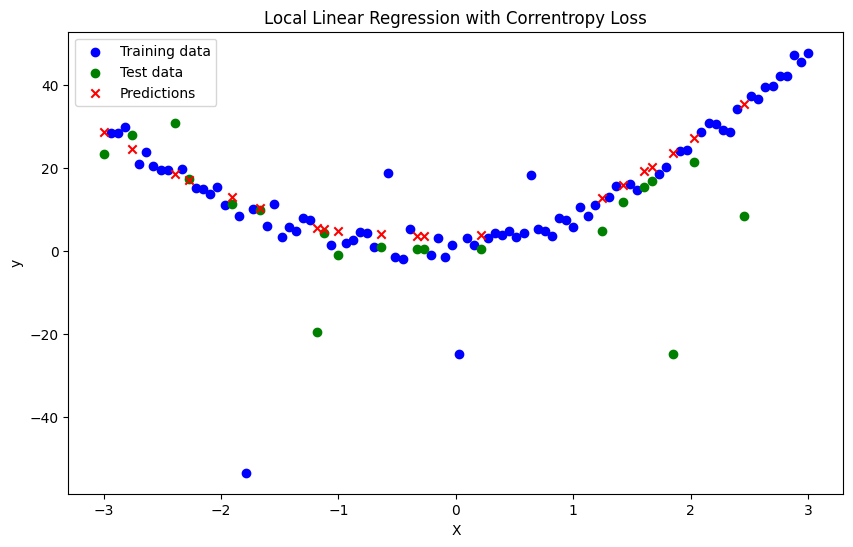

Weighted Sum of Squared Errors with Correntropy Loss#

Correntropy is defined as:

where \( K_\sigma \) is a kernel function with bandwidth \( \sigma \). For simplicity, we use the Gaussian kernel for \( K_\sigma \):

Local Linear Regression with Correntropy Loss#

We aim to fit a linear function \( m(x) \approx a + b(x - x_i) \) by minimizing the correntropy loss. The objective function becomes:

Matrix Formulation#

To solve this problem, we can set up the objective function and compute the parameters \( a \) and \( b \).

Derivation#

The correntropy-based objective function to minimize is:

Mathematical Derivation#

The correntropy-based local linear regression objective function is:

To solve this, we can use gradient descent to optimize the parameters \( a \) and \( b \). The gradients of the objective function with respect to \( a \) and \( b \) are derived as follows:

Since the correntropy loss involves an exponential function, the solution cannot be obtained analytically as in the case of the squared error. We need to use numerical optimization techniques such as gradient descent. Using these gradients, we can update \( a \) and \( b \) iteratively using gradient descent.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Kernel functions: Gaussian kernels

def gaussian_kernel(x, X, bandwidth):

return np.exp(-0.5 * ((x - X) / bandwidth) ** 2)

def gaussian_correntropy(u, sigma):

return np.exp(-0.5 * (u / sigma) ** 2)

# Local Linear Regression with Correntropy Loss

def correntropy_local_linear_regression(x, X_train, y_train, bandwidth, sigma, learning_rate=0.09, epochs=3000):

weights = gaussian_kernel(x, X_train, bandwidth)

# Initialize parameters

a = 0

b = 0

for _ in range(epochs):

gradient_a = 0

gradient_b = 0

for i in range(len(X_train)):

weight = weights[i]

prediction = a + b * (X_train[i] - x)

error = y_train[i] - prediction

correntropy = gaussian_correntropy(error, sigma)

gradient_a -= weight * correntropy * error / sigma**2

gradient_b -= weight * correntropy * error * (X_train[i] - x) / sigma**2

a -= learning_rate * gradient_a

b -= learning_rate * gradient_b

return a + b * (x - x)

# Generate synthetic data

np.random.seed(0)

x = np.linspace(-3, 3, 100)

y = 2 + 3 * x + 4 * x**2 + np.random.normal(0, 2, x.shape)

# Introduce outliers

y[::10] += 120 * (np.random.rand(10) - 0.5)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

# Apply Local Linear Regression with Correntropy Loss

bandwidth = 1.1

sigma = 2.5

y_pred = np.array([correntropy_local_linear_regression(xi, X_train, y_train, bandwidth, sigma) for xi in X_test])

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f"Mean Squared Error: {mse}")

# Plot the results

plt.figure(figsize=(10, 6))

plt.scatter(X_train, y_train, label='Training data', color='blue')

plt.scatter(X_test, y_test, label='Test data', color='green')

plt.scatter(X_test, y_pred, label='Predictions', color='red', marker='x')

plt.title('Local Linear Regression with Correntropy Loss')

plt.xlabel('X')

plt.ylabel('y')

plt.legend()

plt.show()

Mean Squared Error: 205.75919421745843

Bias-Variance Analysis of Kernel Regression#

The Nadaraya-Watson estimator for kernel regression is defined as:

To simplify notation, we introduce weights:

Assumptions#

A0: For simplicity, assume \( x \in \mathbb{R} \).

A1: There is a true smooth function \( f(x) \) such that: $\( y = f(x) + \epsilon \)\( where \) \epsilon \( is independently sampled for each \) x \( from a distribution \) P_\epsilon \( with \) \mathbb{E}[\epsilon] = 0 \( and \) \text{Var}(\epsilon) = \sigma^2 $.

A2: The kernel \( K(z) \) is smooth, \( \int_{\mathbb{R}} K(z)dz = 1 \), \( \int_{\mathbb{R}} zK(z)dz = 0 \), and we denote: $\( \sigma_b^2 = \int_{\mathbb{R}} z^2 K(z)dz, \quad \)$

Squared Integral of the Kernel (( \gamma_b^2 )):

[ \gamma_b^2 = \int_{\mathbb{R}} K^2(z) dz ] This quantity is the L2-norm squared of the kernel function. It plays a role in measuring the smoothness of the kernel in terms of how “sharp” or “spread out” it is. A larger ( \gamma_b^2 ) implies a kernel that is more concentrated (or less smooth), while a smaller ( \gamma_b^2 ) suggests that the kernel is more spread out.

Expectation of \( \hat{m}(x) \)#

By expanding \( f \) in a Taylor series around \( x \):

Given \( y_i = f(X_i) + \epsilon_i \):

Since \( \mathbb{E}[\epsilon_i] = 0 \):

Substituting the Taylor expansion of \( f(X_i) \):

Using the properties of the kernel \( K \):

Hence,

The bias is:

Variance of \( \hat{m}(x) \)#

The variance is:

Since \( y_i = f(X_i) + \epsilon_i \):

Using the kernel properties:

Mean Squared Error (MSE)#

Combining bias and variance:

Optimal Bandwidth#

To minimize MSE, take the derivative with respect to \( h \) and set to zero:

Finally#

Bias: \(\text{Bias}(\hat{m}(x)) \approx \frac{1}{2} f''(x) h^2 \sigma_b^2\)

Variance: \(\text{Var}(\hat{m}(x)) \approx \frac{\sigma^2 \gamma_b^2}{nh}\)

MSE: \(\text{MSE}(\hat{m}(x)) \approx \left(\frac{1}{2} f''(x) h^2 \sigma_b^2\right)^2 + \frac{\sigma^2 \gamma_b^2}{nh}\)

Optimal Bandwidth: \(h \propto n^{-1/5}\)

This analysis shows the trade-off between bias and variance in kernel regression and how the bandwidth parameter \( h \) can be chosen to minimize the mean squared error.

The regularization term involving \( f''(x) \) and its derivative with respect to \( m(x) \) needs careful handling. Let’s revisit the derivation with the proper relationship between \( f''(x) \) and \( m(x) \).

Objective Function#

Step-by-Step Derivation#

Expected Squared Loss Term:

As before, we start with the expected squared loss term:

Regularization Term:

The regularization term involving \( f''(x) \):

The term \( f''(x) \) is the second derivative of the unknown function \( m(x) \), which can be expressed as \( m''(x) \). The derivative of \( (m''(x))^2 \) with respect to \( m(x) \) is computed as:

Since \( \frac{\partial m''(x)}{\partial m(x)} = m'''(x) \):

Combining Terms:

Combining the derivatives from both the expected squared loss and the regularization term:

Simplifying:

Solving for \( m(x) \)#

To solve for \( m(x) \), we need to balance the data fitting term with the regularization term involving the second and third derivatives of \( m(x) \):

To estimate \( m''(x) \) and \( m'''(x) \), we need to use numerical differentiation. We can approximate the second and third derivatives of the function \( m(x) \) based on the available data points. Let’s go through the steps to obtain these derivatives and substitute them into the estimator \( \hat{m}(x) \).

Step-by-Step Process#

Kernel Density Estimates for \( \hat{m}(x) \)

The Nadaraya-Watson estimator for \( \hat{m}(x) \) is given by:

Second Derivative \( m''(x) \)

The second derivative can be estimated using finite differences. For example, a central difference approximation is:

Third Derivative \( m'''(x) \)

The third derivative can also be approximated using finite differences:

Substituting into the Estimator

Substitute the estimates of \( m''(x) \) and \( m'''(x) \) into the regularization term:

Detailed Formulation#

Nadaraya-Watson Estimator:

Second Derivative Approximation:

Third Derivative Approximation:

Final Estimator with Regularization:

Simplified Expression#

Combining these approximations into a single expression, we have:

This final form takes into account the kernel density estimates as well as the regularization term that penalizes high curvature.

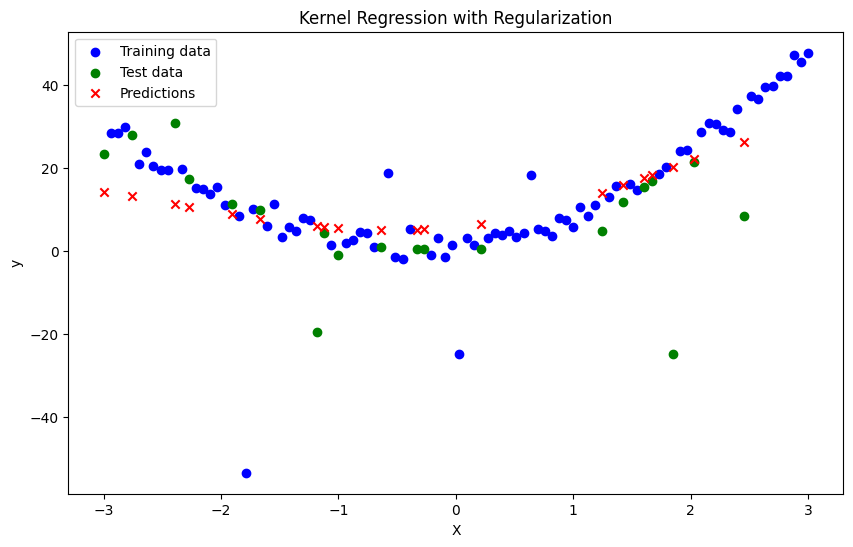

Homework 2#

In the following code, a regularization term has been added. Correct some issues to improve the fit.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Gaussian kernel function

def gaussian_kernel(x, X, bandwidth):

return np.exp(-0.5 * ((x - X) / bandwidth) ** 2)

# Compute the local linear regression estimate

def local_linear_regression(x, X_train, y_train, bandwidth):

weights = gaussian_kernel(x, X_train, bandwidth)

weighted_sum = np.sum(weights * y_train)

normalization = np.sum(weights)

return weighted_sum / normalization

# Compute second derivative using finite differences

def compute_second_derivative(m, x, h):

m_x_plus_h = m(x + h)

m_x_minus_h = m(x - h)

m_x_plus_2h = m(x + 2*h)

m_x_minus_2h = m(x - 2*h)

m_prime_prime = (m_x_plus_2h - 2*m_x_plus_h + m_x_minus_2h) / (h ** 2)

return m_prime_prime

# Kernel Regression with Regularization

def kernel_regression_with_regularization(X_train, y_train, X_test, bandwidth, lambda_, sigma_b, h):

def m_estimator(x):

return local_linear_regression(x, X_train, y_train, bandwidth)

y_pred = np.zeros_like(X_test)

for i, x in enumerate(X_test):

m_x = m_estimator(x)

m_prime_prime = compute_second_derivative(m_estimator, x, h)

# Regularization term

regularization_term = lambda_ * (0.5 * h ** 2 * sigma_b ** 2) * m_prime_prime

y_pred[i] = m_x + regularization_term

return y_pred

# Generate synthetic data

np.random.seed(0)

x = np.linspace(-3, 3, 100)

y = 2 + 3 * x + 4 * x**2 + np.random.normal(0, 2, x.shape)

# Introduce outliers

y[::10] += 120 * (np.random.rand(10) - 0.5)

# Split the data into training and test sets

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

# Apply Kernel Regression with Regularization

bandwidth = 1.1

lambda_ = 0.1

sigma_b = 1.0

h = 0.1

y_pred = kernel_regression_with_regularization(X_train, y_train, X_test, bandwidth, lambda_, sigma_b, h)

# Evaluate the model

mse = mean_squared_error(y_test, y_pred)

print(f"Mean Squared Error: {mse}")

# Plot the results

plt.figure(figsize=(10, 6))

plt.scatter(X_train, y_train, label='Training data', color='blue')

plt.scatter(X_test, y_test, label='Test data', color='green')

plt.scatter(X_test, y_pred, label='Predictions', color='red', marker='x')

plt.title('Kernel Regression with Regularization')

plt.xlabel('X')

plt.ylabel('y')

plt.legend()

plt.show()

Mean Squared Error: 199.19875538433809