Metric Learning#

The goal of metric learning is to adjust a pairwise distance metric, such as the Mahalanobis distance \( d_\mathbf{M}(x, y) = \sqrt{(x-y)^T \mathbf{M} (x-y)} \), to better suit the specific problem based on training examples.

Types of Constraints in Metric Learning

Metric learning methods typically rely on side information presented in the form of constraints such as:

Must-link / Cannot-link Constraints:

Must-link (Positive Pairs): These are pairs of examples \( (x_i, x_j) \) that should be similar. For instance, in a facial recognition task, different images of the same person would be considered a must-link pair.

Cannot-link (Negative Pairs): These are pairs of examples \( (x_i, x_j) \) that should be dissimilar, such as images of different people in the facial recognition task.

Formally, these constraints are denoted as:

\( S = \{(x_i, x_j) \mid x_i \text{ and } x_j \text{ should be similar}\} \)

\( D = \{(x_i, x_j) \mid x_i \text{ and } x_j \text{ should be dissimilar}\} \)

Relative Constraints (Training Triplets):

These involve triplets \( (x_i, x_j, x_k) \) where \( x_i \) should be more similar to \( x_j \) than to \( x_k \). Formally:

\( R = \{(x_i, x_j, x_k) \mid x_i \text{ should be more similar to } x_j \text{ than to } x_k\} \)
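When only class labels are available, the three constraint sets above are often derived from them. The following is a minimal sketch under that assumption; the helper name `constraints_from_labels` is hypothetical, and enumerating every triplet is only practical for very small datasets.

```python
import itertools
import numpy as np

def constraints_from_labels(y):
    """Derive S, D and R (as tuples of indices) from class labels.

    Assumption: two points are 'similar' exactly when they share a label.
    """
    n = len(y)
    pairs = list(itertools.combinations(range(n), 2))
    S = [(i, j) for i, j in pairs if y[i] == y[j]]   # must-link pairs
    D = [(i, j) for i, j in pairs if y[i] != y[j]]   # cannot-link pairs
    # Triplets (i, j, k): x_i should be closer to x_j than to x_k
    R = [(i, j, k)
         for i in range(n) for j in range(n) for k in range(n)
         if i != j and y[i] == y[j] and y[i] != y[k]]
    return S, D, R

y = np.array([0, 0, 1, 1])
S, D, R = constraints_from_labels(y)
print("S:", S)
print("D:", D)
print("number of triplets:", len(R))
```

In practice, the pairs and triplets are usually subsampled rather than enumerated, since the number of triplets grows cubically with the number of points.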

The Objective of Metric Learning#

A metric learning algorithm aims to find the parameters of the metric (in the case of the Mahalanobis distance, this means learning the matrix \( \mathbf{M} \)) so that the metric best aligns with the provided constraints.

Visualizing Metric Learning#

Imagine a situation where we’re trying to cluster images based on their content. Initially, the distance metric might not distinguish well between similar and dissimilar images. After metric learning, however, the learned metric should ensure that images of the same object are clustered together, while images of different objects are farther apart.

Metrics for Numerical Data#

For data points in a vector space \( X \subset \mathbb{R}^d \), the following distance metrics are commonly used:

Minkowski Distances:

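Minkowski distances are defined by the \( L_p \) norms, where \( x_k \) denotes the \( k \)-th coordinate of \( x \):

\( d_p(x, y) = \|x - y\|_p = \left( \sum_{k=1}^{d} |x_k - y_k|^p \right)^{1/p} \)

For specific values of \( p \):

Manhattan Distance (\( p = 1 \)):

\( d_1(x, y) = \sum_{k=1}^{d} |x_k - y_k| \)

Euclidean Distance (\( p = 2 \)):

\( d_2(x, y) = \sqrt{\sum_{k=1}^{d} (x_k - y_k)^2} \)

Chebyshev Distance (\( p \to \infty \)):

\( d_\infty(x, y) = \max_{k} |x_k - y_k| \)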
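As a small illustration of the Minkowski family, the sketch below computes the three special cases with NumPy. The function name `minkowski` is chosen here for illustration and is not taken from any library.

```python
import numpy as np

def minkowski(x, y, p):
    """Minkowski distance of order p between two vectors (p may be np.inf)."""
    diff = np.abs(np.asarray(x, dtype=float) - np.asarray(y, dtype=float))
    if np.isinf(p):
        return diff.max()                 # Chebyshev: largest coordinate gap
    return (diff ** p).sum() ** (1.0 / p)

x, y = [0.0, 0.0], [3.0, 4.0]
d1 = minkowski(x, y, 1)         # Manhattan
d2 = minkowski(x, y, 2)         # Euclidean
dinf = minkowski(x, y, np.inf)  # Chebyshev
print(d1, d2, dinf)
```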

Mahalanobis Distance:

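The Mahalanobis distance accounts for feature correlations:

\( d_{\Sigma^{-1}}(x, y) = \sqrt{(x-y)^T \Sigma^{-1} (x-y)} \)

where \( \Sigma \) is the covariance matrix of the data. In metric learning, it is often generalized as:

\( d_\mathbf{M}(x, y) = \sqrt{(x-y)^T \mathbf{M} (x-y)} \)

where \( \mathbf{M} \) is a positive semi-definite matrix. When \( \mathbf{M} \) is the identity matrix, it reduces to the Euclidean distance. Since any positive semi-definite matrix can be factored as \( \mathbf{M} = L^T L \), we have \( d_\mathbf{M}(x, y) = \|Lx - Ly\|_2 \): the distance is a Euclidean distance after the linear map \( L \), and a low-rank \( \mathbf{M} \) projects the data into a lower-dimensional space.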
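The link between a positive semi-definite matrix and a linear projection can be checked numerically. The sketch below assumes a hypothetical \( 1 \times 3 \) matrix `L`, builds \( M = L^T L \), and compares the generalized Mahalanobis distance with the Euclidean distance between the projected points.

```python
import numpy as np

def mahalanobis(x, y, M):
    """Generalized Mahalanobis distance sqrt((x - y)^T M (x - y))."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.sqrt(max(diff @ M @ diff, 0.0)))  # guard against rounding below 0

rng = np.random.default_rng(0)
L = rng.normal(size=(1, 3))     # hypothetical projection from 3 dimensions to 1
M = L.T @ L                     # rank-one positive semi-definite matrix
x, y = rng.normal(size=3), rng.normal(size=3)

d_M = mahalanobis(x, y, M)
d_proj = float(np.linalg.norm(L @ x - L @ y))   # Euclidean distance after projection
d_I = mahalanobis(x, y, np.eye(3))              # identity matrix: plain Euclidean distance
print(d_M, d_proj, d_I)
```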

Cosine Similarity:

Cosine similarity measures the cosine of the angle between vectors:

\( \cos(x, y) = \frac{x^T y}{\|x\|_2 \, \|y\|_2} \)
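A short sketch of cosine similarity follows, using the standard definition (inner product divided by the product of the Euclidean norms). It assumes neither vector is zero.

```python
import numpy as np

def cosine_similarity(x, y):
    """Cosine of the angle between two non-zero vectors."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))

s_same = cosine_similarity([1, 2], [2, 4])     # parallel vectors
s_orth = cosine_similarity([1, 0], [0, 3])     # orthogonal vectors
s_opp = cosine_similarity([1, 2], [-1, -2])    # opposite directions
print(s_same, s_orth, s_opp)
```

Unlike the distances above, this is a similarity: larger values mean the vectors are more alike, and it ignores vector length.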

Mahalanobis Distance Learning#

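Mahalanobis distance learning focuses on adapting the Mahalanobis distance, which is defined as:

\( d_\mathbf{M}(x, y) = \sqrt{(x-y)^T \mathbf{M} (x-y)} \)

where \( \mathbf{M} \) takes the place of the inverse covariance matrix \( \Sigma^{-1} \) of the classical Mahalanobis distance. In practice, the squared form is often used:

\( d_\mathbf{M}^2(x, y) = (x-y)^T \mathbf{M} (x-y) \)

The goal is to learn the matrix \( \mathbf{M} \) from the training data, where \( \mathbf{M} \) must remain positive semi-definite (PSD) during optimization.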

Key Approaches:

MMC (Xing et al., 2002):

Objective: Learn a Mahalanobis distance for clustering based on similar and dissimilar pairs.

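Formulation:

\( \max_{M \in \mathcal{S}_d^+} \sum_{(x_i, x_j) \in D} d_M(x_i, x_j) \quad \text{subject to} \quad \sum_{(x_i, x_j) \in S} d_M^2(x_i, x_j) \le 1 \)

where \( \mathcal{S}_d^+ \) is the cone of \( d \times d \) PSD matrices. The objective uses the non-squared distance; with the squared distance, the solution would always have rank one.

Method: Uses a projected gradient ascent algorithm, with projections onto the PSD cone.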

Complexity: The PSD projection requires \( O(d^3) \) time, making it inefficient for large \( d \).
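The PSD projection mentioned above is commonly computed by an eigendecomposition followed by clipping the negative eigenvalues to zero, which is where the \( O(d^3) \) cost comes from. The sketch below illustrates this standard construction; the function name `project_psd` is hypothetical.

```python
import numpy as np

def project_psd(M):
    """Project a square matrix onto the PSD cone (nearest in Frobenius norm)."""
    M = (M + M.T) / 2.0                      # symmetrize first
    w, V = np.linalg.eigh(M)                 # O(d^3) eigendecomposition
    return (V * np.clip(w, 0.0, None)) @ V.T # drop negative eigenvalues

M = np.array([[2.0, 0.0],
              [0.0, -1.0]])
P = project_psd(M)
print(P)
```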

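NCA (Neighbourhood Components Analysis) (Goldberger et al., 2004):

Objective: Optimize the expected leave-one-out error of a stochastic nearest neighbor classifier in the projection space induced by \( M = L^T L \).

Probability Definition: the probability that \( x_i \) selects \( x_j \) as its neighbor is

\( p_{ij} = \frac{\exp(-\|Lx_i - Lx_j\|_2^2)}{\sum_{l \neq i} \exp(-\|Lx_i - Lx_l\|_2^2)} \) and \( p_{ii} = 0 \)

Probability that \( x_i \) is correctly classified, where \( y_i \) is the label of \( x_i \):

\( p_i = \sum_{j : y_j = y_i} p_{ij} \)

The Mahalanobis distance is learned by maximizing the sum of probabilities:

\( \max_{L} \sum_{i} p_i \)

The problem is solved using gradient ascent, but the formulation is nonconvex, which can lead to local optima.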
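To make the NCA objective concrete, the sketch below evaluates it for a fixed linear map `L`, assuming the usual softmax over negative squared distances with \( p_{ii} = 0 \). The function name `nca_objective` and the toy data are illustrative assumptions, and no optimization is performed.

```python
import numpy as np

def nca_objective(L, X, y):
    """Return (sum_i p_i, P) for the stochastic neighbor model under the map L."""
    Z = X @ L.T                                           # project the data
    sq = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(-1)   # pairwise squared distances
    np.fill_diagonal(sq, np.inf)                          # enforces p_ii = 0
    logits = -sq
    logits -= logits.max(axis=1, keepdims=True)           # numerical stability
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)                     # each row sums to 1
    same = y[:, None] == y[None, :]
    p_i = (P * same).sum(axis=1)                          # prob. of correct classification
    return float(p_i.sum()), P

# Feature 0 separates the classes; feature 1 is large-scale noise.
X = np.array([[0.0, 0.0], [0.1, 5.0], [3.0, 0.2], [3.1, 5.1]])
y = np.array([0, 0, 1, 1])

f_identity, P_identity = nca_objective(np.eye(2), X, y)
f_projected, P_projected = nca_objective(np.array([[1.0, 0.0]]), X, y)
print(f_identity, f_projected)
```

On this toy data, discarding the noisy feature gives a much higher objective than the identity map, which is the kind of transformation gradient ascent on `L` is meant to find.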

Limitation of NCA#

NCA primarily aims to improve the performance of k-nearest neighbors (k-NN) by learning a Mahalanobis distance that increases the probability of correctly classifying data points. However, because the learned transformation is linear, its effectiveness is limited when the classes are not linearly separable, as in the “two moons” dataset.

```python
import numpy as np
import matplotlib.pyplot as plt
from metric_learn import NCA  # provided by the metric-learn package

# Step 1: Create a synthetic dataset with 2 classes
np.random.seed(42)
class_1 = 2 * np.random.randn(50, 2) + np.array([2, 2])  # Class ω1 centered at (2, 2)
class_2 = np.random.randn(50, 2) + np.array([-2, -2])    # Class ω2 centered at (-2, -2)
X = np.vstack((class_1, class_2))
Y = np.hstack((np.zeros(50), np.ones(50)))  # Labels: 0 for ω1, 1 for ω2

# Step 2: Apply NCA
nca = NCA(max_iter=1000)
X_nca = nca.fit_transform(X, Y)

# Step 3: Visualization
plt.figure(figsize=(10, 4))

# Before NCA
plt.subplot(1, 2, 1)
plt.scatter(X[Y == 0, 0], X[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X[Y == 1, 0], X[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("Before NCA")
plt.xlabel("Feature 1")
plt.ylabel("Feature 2")
plt.legend()

# After NCA
plt.subplot(1, 2, 2)
plt.scatter(X_nca[Y == 0, 0], X_nca[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X_nca[Y == 1, 0], X_nca[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("After NCA")
plt.xlabel("Transformed Feature 1")
plt.ylabel("Transformed Feature 2")
plt.legend()

plt.tight_layout()
plt.show()
```


Homework: Tune the parameters of NCA, such as \( \tau \)#

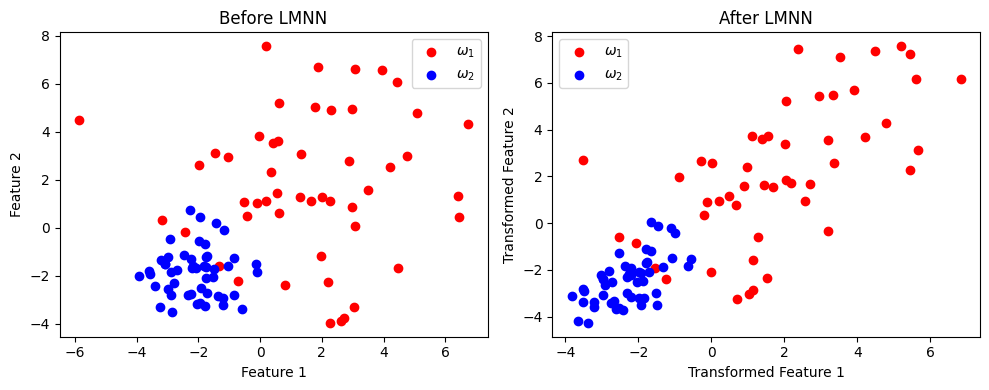

Large Margin Nearest Neighbor (LMNN)#

LMNN is another popular metric learning algorithm that aims to improve k-Nearest Neighbors (k-NN) classification by learning a Mahalanobis distance metric. The goal of LMNN is to ensure that:

Target neighbors (points of the same class) are close to each other in the transformed space.

Impostors (points from different classes) are pushed further apart by a large margin.

The popularity of LMNN comes from the way its training constraints are defined, which is tailored to k-NN classification. For each training instance \( x_i \), the k nearest neighbors of the same class (referred to as “target neighbors”) are required to be closer than instances from other classes (referred to as “impostors”).

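Formally, the constraints are defined as:

\( S = \{(x_i, x_j) \mid y_i = y_j \text{ and } x_j \text{ is a target neighbor of } x_i\} \)

\( R = \{(x_i, x_j, x_k) \mid (x_i, x_j) \in S,\ y_i \neq y_k\} \)

where \( S \) is the set of target-neighbor pairs and \( R \) is the set of triplets formed by a point, one of its target neighbors, and a point of a different class.

The distance is learned using the following convex program:

\( \min_{M \in \mathcal{S}_d^+} (1-\lambda) \sum_{(x_i, x_j) \in S} d_M^2(x_i, x_j) + \lambda \sum_{(x_i, x_j, x_k) \in R} \max\left(0,\ 1 + d_M^2(x_i, x_j) - d_M^2(x_i, x_k)\right) \)

where \( \lambda \in [0,1] \) controls the trade-off between pulling target neighbors closer together and pushing away impostors.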

Although the number of constraints in this problem grows quickly with the number of data points \(n\), many constraints are often trivially satisfied.

Soft version of LMNN#

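The distance is then learned using the following convex program, in which slack variables \( \xi_{ijk} \) allow margin violations:

\( \min_{M \in \mathcal{S}_d^+,\ \xi \geq 0} (1-\lambda) \sum_{(x_i, x_j) \in S} d_M^2(x_i, x_j) + \lambda \sum_{i,j,k} \xi_{ijk} \)

subject to:

\( d_M^2(x_i, x_k) - d_M^2(x_i, x_j) \geq 1 - \xi_{ijk} \) for every triplet in which \( x_j \) is a target neighbor of \( x_i \) and \( x_k \) belongs to a different class,

where \(\xi_{ijk} \geq 0\) and \( \lambda \in [0,1]\) controls the trade-off between pulling target neighbors closer together and pushing away impostors.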
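The sketch below evaluates an LMNN-style loss for a fixed linear map `L`, with \( M = L^T L \). It assumes the common hinge form, in which each slack variable takes its smallest feasible value \( \max(0, 1 + d^2(x_i, x_j) - d^2(x_i, x_k)) \), and it assumes target neighbors are chosen once using the Euclidean distance. The function name `lmnn_loss` and the toy data are illustrative.

```python
import numpy as np

def lmnn_loss(L, X, y, k=1, lam=0.5):
    """(1 - lam) * pull term + lam * hinge push term for the map L."""
    n = len(X)
    sq_euclid = ((X[:, None] - X[None]) ** 2).sum(-1)  # used only to pick target neighbors
    Z = X @ L.T
    sq = ((Z[:, None] - Z[None]) ** 2).sum(-1)         # squared distances under M = L^T L
    pull, push = 0.0, 0.0
    for i in range(n):
        same = np.flatnonzero((y == y[i]) & (np.arange(n) != i))
        targets = same[np.argsort(sq_euclid[i, same])[:k]]  # k nearest same-class points
        impostors = np.flatnonzero(y != y[i])                # all differently labeled points
        for j in targets:
            pull += sq[i, j]
            # hinge: positive only when an impostor is within the unit margin
            push += np.maximum(0.0, 1.0 + sq[i, j] - sq[i, impostors]).sum()
    return float((1 - lam) * pull + lam * push)

X = np.array([[0.0, 0.0], [1.0, 0.0], [4.0, 0.0], [5.0, 0.0]])
y = np.array([0, 0, 1, 1])
loss_identity = lmnn_loss(np.eye(2), X, y)        # no margin violations, only the pull term
loss_shrunk = lmnn_loss(0.2 * np.eye(2), X, y)    # smaller pull term, but margins are violated
print(loss_identity, loss_shrunk)
```

Comparing the two calls shows the trade-off controlled by `lam`: shrinking all distances reduces the pull term but activates the hinge terms.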

```python
import numpy as np
import matplotlib.pyplot as plt
from metric_learn import LMNN  # provided by the metric-learn package

# Step 1: Create a synthetic dataset with 2 classes
np.random.seed(42)
class_1 = 3 * np.random.randn(50, 2) + np.array([2, 2])  # Class ω1 centered at (2, 2)
class_2 = np.random.randn(50, 2) + np.array([-2, -2])    # Class ω2 centered at (-2, -2)
X = np.vstack((class_1, class_2))
Y = np.hstack((np.zeros(50), np.ones(50)))  # Labels: 0 for ω1, 1 for ω2

# Step 2: Apply LMNN
lmnn = LMNN(n_neighbors=1, learn_rate=1e-6)
X_lmnn = lmnn.fit_transform(X, Y)

# Step 3: Visualization
plt.figure(figsize=(10, 4))

# Before LMNN
plt.subplot(1, 2, 1)
plt.scatter(X[Y == 0, 0], X[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X[Y == 1, 0], X[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("Before LMNN")
plt.xlabel("Feature 1")
plt.ylabel("Feature 2")
plt.legend()

# After LMNN
plt.subplot(1, 2, 2)
plt.scatter(X_lmnn[Y == 0, 0], X_lmnn[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X_lmnn[Y == 1, 0], X_lmnn[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("After LMNN")
plt.xlabel("Transformed Feature 1")
plt.ylabel("Transformed Feature 2")
plt.legend()

plt.tight_layout()
plt.show()
```

Noise Handling Strategy#

In the above example, the sensitivity to noisy points can be addressed by adjusting the number of target neighbors \( k \) (the `n_neighbors` argument of `LMNN`).

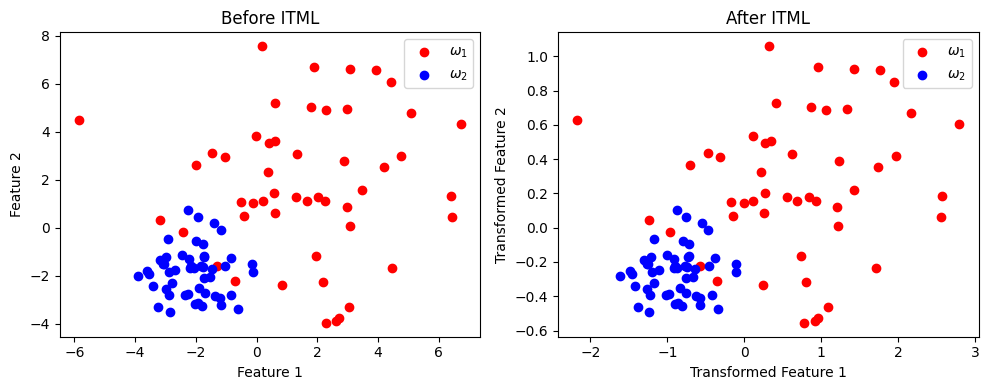

Miniproject: Information-Theoretic Metric Learning (ITML) (Davis et al.)#

Explain and correct the following text.

Present it to the classroom.

Miniproject: Change the divergence used in ITML#

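We quantify the “closeness” between two Mahalanobis distances parameterized by \( A \) and \( A_0 \) through the multivariate Gaussian distributions with equal means and covariances \( A^{-1} \) and \( A_0^{-1} \). In this section, \( d_A(x, y) = (x-y)^T A (x-y) \) denotes the squared Mahalanobis distance.

Given a Mahalanobis distance parameterized by \( A \), the corresponding multivariate Gaussian distribution is:

\( p(x; A) = \frac{1}{Z} \exp\left(-\frac{1}{2} d_A(x, \mu)\right) \)

where \( Z \) is a normalizing constant and \( A^{-1} \) is the covariance of the distribution. The distance between two Mahalanobis distances parameterized by \( A_0 \) and \( A \) is measured by the (differential) relative entropy between their corresponding Gaussians:

\( \text{KL}(p(x; A_0) \,\|\, p(x; A)) = \int p(x; A_0) \log \frac{p(x; A_0)}{p(x; A)} \, dx \)

This measure provides a well-founded quantification of “closeness” between two probability density functions.

Given pairs of similar points \( S \) and dissimilar points \( D \), the distance metric learning problem is formulated as:

\( \min_{A} \text{KL}(p(x; A_0) \,\|\, p(x; A)) \)

subject to:

\( d_A(x_i, x_j) \leq u \) for \( (i, j) \in S \)

\( d_A(x_i, x_j) \geq \ell \) for \( (i, j) \in D \)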

Algorithm of ITML#

The information-theoretic objective for metric learning can be expressed using a specific type of Bregman divergence, which makes it possible to apply Bregman’s method to solve the metric learning problem. The problem is also equivalent to a low-rank kernel learning problem, which allows the algorithm to be kernelized.

Metric Learning as LogDet Optimization#

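The LogDet divergence is a Bregman matrix divergence generated by the convex function \(\phi(X) = - \log \det X\) defined over the cone of positive-definite matrices. For \(n \times n\) matrices \(A\) and \(A'\), it is given by:

\( D_{\ell d}(A, A') = \operatorname{tr}\left(A (A')^{-1}\right) - \log \det\left(A (A')^{-1}\right) - n \)

The differential relative entropy between two multivariate Gaussians can be expressed as a combination of a Mahalanobis distance between mean vectors and the LogDet divergence between covariance matrices:

\( \text{KL}(p(x; \mu_1, \Sigma_1) \,\|\, p(x; \mu_2, \Sigma_2)) = \frac{1}{2} D_{\ell d}(\Sigma_1, \Sigma_2) + \frac{1}{2} (\mu_1 - \mu_2)^T \Sigma_2^{-1} (\mu_1 - \mu_2) \)

With equal means and covariances \( A_0^{-1} \) and \( A^{-1} \), this reduces to \( \text{KL}(p(x; A_0) \,\|\, p(x; A)) = \frac{1}{2} D_{\ell d}(A_0^{-1}, A^{-1}) = \frac{1}{2} D_{\ell d}(A, A_0) \).

The LogDet divergence, also known as Stein’s loss, is invariant under scaling and invertible linear transformations:

\( D_{\ell d}(A, A_0) = D_{\ell d}(sA, sA_0) \) for any \( s > 0 \), and \( D_{\ell d}(A, A_0) = D_{\ell d}(T^T A T, T^T A_0 T) \) for any invertible matrix \( T \).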
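The invariance properties can be checked numerically. The sketch below assumes the standard form of the LogDet divergence, \( \operatorname{tr}(A A'^{-1}) - \log\det(A A'^{-1}) - n \), and uses randomly generated positive-definite matrices; the names `logdet_div` and `random_spd` are illustrative.

```python
import numpy as np

def logdet_div(A, A0):
    """LogDet divergence tr(A A0^-1) - log det(A A0^-1) - n for positive-definite inputs."""
    n = A.shape[0]
    P = A @ np.linalg.inv(A0)
    _, logdet = np.linalg.slogdet(P)     # log-determinant, numerically safer than log(det)
    return float(np.trace(P) - logdet - n)

def random_spd(rng, n):
    """Random symmetric positive-definite matrix (illustrative helper)."""
    B = rng.normal(size=(n, n))
    return B @ B.T + np.eye(n)

rng = np.random.default_rng(0)
A, A0 = random_spd(rng, 3), random_spd(rng, 3)
T = rng.normal(size=(3, 3)) + 3.0 * np.eye(3)   # an invertible transformation

d_base = logdet_div(A, A0)
d_scaled = logdet_div(2.5 * A, 2.5 * A0)              # scaling both arguments
d_transformed = logdet_div(T.T @ A @ T, T.T @ A0 @ T) # invertible linear transformation
d_self = logdet_div(A, A)                             # divergence of a matrix from itself
print(d_base, d_scaled, d_transformed, d_self)
```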

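Using this equivalence, we can reframe the distance metric learning problem as a LogDet optimization problem:

\( \min_{A \succeq 0} D_{\ell d}(A, A_0) \)

subject to:

\( \operatorname{tr}\left(A (x_i - x_j)(x_i - x_j)^T\right) \leq u \) for \( (i, j) \in S \)

\( \operatorname{tr}\left(A (x_i - x_j)(x_i - x_j)^T\right) \geq \ell \) for \( (i, j) \in D \)

To address the possibility of infeasible solutions, slack variables \(\xi\) are introduced:

\( \min_{A \succeq 0,\ \xi} D_{\ell d}(A, A_0) + \gamma \, D_{\ell d}(\operatorname{diag}(\xi), \operatorname{diag}(\xi_0)) \)

subject to:

\( \operatorname{tr}\left(A (x_i - x_j)(x_i - x_j)^T\right) \leq \xi_{c(i,j)} \) for \( (i, j) \in S \)

\( \operatorname{tr}\left(A (x_i - x_j)(x_i - x_j)^T\right) \geq \xi_{c(i,j)} \) for \( (i, j) \in D \)

Here, \( \xi_0 \) holds the original thresholds (\( u \) for similar pairs and \( \ell \) for dissimilar pairs), \( c(i,j) \) is the index of the constraint on the pair \( (i, j) \), and \(\gamma\) controls the trade-off between constraint satisfaction and minimizing \(D_{\ell d}(A, A_0)\).

To solve this optimization problem, the method extends techniques from low-rank kernel learning, which involve Bregman projections:

\( A_{t+1} = A_t + \beta A_t (x_i - x_j)(x_i - x_j)^T A_t \)

where \(x_i\) and \(x_j\) are the constrained data points, and \(\beta\) is the projection parameter. Each projection costs \(O(d^2)\), so a single pass over all \( c \) constraints costs \(O(cd^2)\). The algorithm avoids eigen-decomposition, and projections can be efficiently computed over a factorization \(W\) of \(A\), where \(A = W^T W\).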

Algorithm: Information-Theoretic Metric Learning#

Input:

\( X \): Input \( d \times n \) matrix

\( S \): Set of similar pairs

\( D \): Set of dissimilar pairs

\( u \), \( \ell \): Distance thresholds

\( A_0 \): Input Mahalanobis matrix

\( \gamma \): Slack parameter

\( c \): Constraint index function

Output:

\( A \): Output Mahalanobis matrix

Procedure:

Initialize:

\( A \leftarrow A_0 \)

\( \lambda_{ij} \leftarrow 0 \) for all \( i, j \)

Set \( \xi_{c(i,j)} \leftarrow u \) for \( (i, j) \in S \); otherwise, set \( \xi_{c(i,j)} \leftarrow \ell \)

Repeat until convergence:

Pick a constraint \( (i, j) \in S \) or \( (i, j) \in D \)

Compute \( p \leftarrow (x_i - x_j)^T A (x_i - x_j) \)

Set \( \delta \leftarrow 1 \) if \( (i, j) \in S \); otherwise, set \( \delta \leftarrow -1 \)

Compute: \( \alpha \leftarrow \min \left( \lambda_{ij}, \frac{\delta}{2} \left( \frac{1}{p} - \frac{\gamma}{\xi_{c(i,j)}} \right) \right) \)

Compute: \( \beta \leftarrow \frac{\delta \alpha}{1 - \delta \alpha p} \)

Update: \( \xi_{c(i,j)} \leftarrow \frac{\gamma \xi_{c(i,j)}}{\gamma + \delta \alpha \xi_{c(i,j)}} \)

Update: \( \lambda_{ij} \leftarrow \lambda_{ij} - \alpha \)

Update: \( A \leftarrow A + \beta A (x_i - x_j) (x_i - x_j)^T A \)

Return:

\( A \)
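The procedure above can be sketched directly in NumPy. This is a minimal illustration rather than the reference implementation: the function name `itml`, the cyclic order in which constraints are visited, the stopping rule, and the toy data are all assumptions, and `X` stores one point per row (the transpose of the \( d \times n \) convention used above).

```python
import numpy as np

def itml(X, S, D, u, l, A0=None, gamma=1.0, n_sweeps=100, tol=1e-6):
    """Sketch of the ITML updates; S and D are lists of index pairs (i, j)."""
    d = X.shape[1]
    A = np.eye(d) if A0 is None else A0.copy()
    # delta = +1 for similar pairs, -1 for dissimilar pairs
    constraints = [(i, j, 1.0) for i, j in S] + [(i, j, -1.0) for i, j in D]
    lam = np.zeros(len(constraints))                      # dual variables lambda_ij
    xi = np.array([u if delta > 0 else l for _, _, delta in constraints], dtype=float)
    for _ in range(n_sweeps):
        lam_old = lam.copy()
        for c, (i, j, delta) in enumerate(constraints):   # cyclic pass (an assumption)
            v = X[i] - X[j]
            p = v @ A @ v
            if p <= 1e-12:                                # skip coincident points
                continue
            alpha = min(lam[c], delta / 2.0 * (1.0 / p - gamma / xi[c]))
            beta = delta * alpha / (1.0 - delta * alpha * p)
            xi[c] = gamma * xi[c] / (gamma + delta * alpha * xi[c])
            lam[c] -= alpha
            Av = A @ v
            A = A + beta * np.outer(Av, Av)               # A v v^T A, since A is symmetric
        if np.abs(lam - lam_old).sum() < tol:             # assumed stopping rule
            break
    return A

# Toy data: points 0 and 1 are declared similar, points 0 and 2 dissimilar.
X = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 0.5]])
S, D = [(0, 1)], [(0, 2)]
A = itml(X, S, D, u=1.0, l=4.0, gamma=1.0)

def sq_dist(A, a, b):
    """Squared Mahalanobis distance (a - b)^T A (a - b)."""
    return float((a - b) @ A @ (a - b))

d_sim_before, d_sim_after = sq_dist(np.eye(2), X[0], X[1]), sq_dist(A, X[0], X[1])
d_dis_before, d_dis_after = sq_dist(np.eye(2), X[0], X[2]), sq_dist(A, X[0], X[2])
print(A)
print(d_sim_before, d_sim_after, d_dis_before, d_dis_after)
```

With a finite `gamma`, the constraints are only satisfied up to the learned slack, so the similar pair moves closer and the dissimilar pair moves apart without necessarily reaching the thresholds `u` and `l`.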

```python
import numpy as np
import matplotlib.pyplot as plt
from metric_learn import ITML_Supervised  # provided by the metric-learn package

# Step 1: Create a synthetic dataset with 2 classes
np.random.seed(42)
class_1 = 3 * np.random.randn(50, 2) + np.array([2, 2])  # Class ω1 centered at (2, 2)
class_2 = np.random.randn(50, 2) + np.array([-2, -2])    # Class ω2 centered at (-2, -2)
X = np.vstack((class_1, class_2))
Y = np.hstack((np.zeros(50), np.ones(50)))  # Labels: 0 for ω1, 1 for ω2

# Step 2: Apply ITML_Supervised
itml = ITML_Supervised()
X_itml = itml.fit_transform(X, Y)

# Step 3: Visualization
plt.figure(figsize=(10, 4))

# Before ITML
plt.subplot(1, 2, 1)
plt.scatter(X[Y == 0, 0], X[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X[Y == 1, 0], X[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("Before ITML")
plt.xlabel("Feature 1")
plt.ylabel("Feature 2")
plt.legend()

# After ITML
plt.subplot(1, 2, 2)
plt.scatter(X_itml[Y == 0, 0], X_itml[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X_itml[Y == 1, 0], X_itml[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("After ITML")
plt.xlabel("Transformed Feature 1")
plt.ylabel("Transformed Feature 2")
plt.legend()

plt.tight_layout()
plt.show()
```

Miniproject: Nonlinear Metric Learning#

Introduce an autoencoder (AE) based method combined with Multidimensional Scaling (MDS).

Implement it using a suitable library.

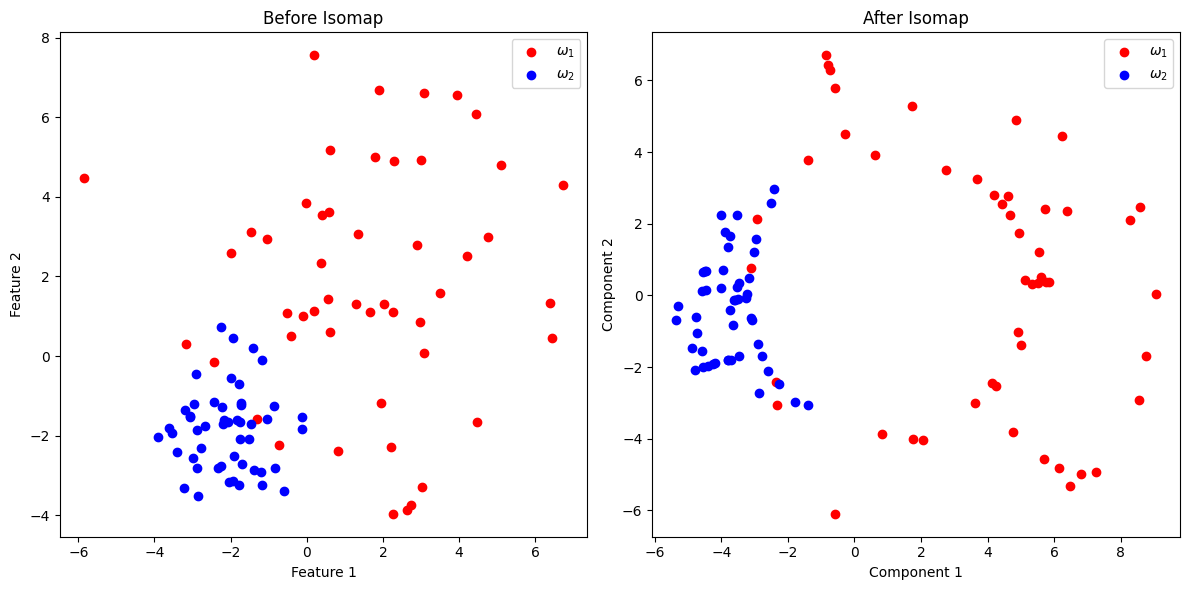

Homework: Course Discussion on the Following Code#

Isomap is applied to reduce the dimensionality of the dataset while capturing nonlinear relationships.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import manifold

# Step 1: Create a synthetic dataset with 2 classes
np.random.seed(42)
class_1 = 3 * np.random.randn(50, 2) + np.array([2, 2])  # Class ω1 centered at (2, 2)
class_2 = np.random.randn(50, 2) + np.array([-2, -2])    # Class ω2 centered at (-2, -2)
X = np.vstack((class_1, class_2))
Y = np.hstack((np.zeros(50), np.ones(50)))  # Labels: 0 for ω1, 1 for ω2

# Step 2: Apply Isomap for nonlinear dimensionality reduction
isomap = manifold.Isomap(n_neighbors=3, n_components=2)
X_isomap = isomap.fit_transform(X)

# Visualization
plt.figure(figsize=(12, 6))

# Before Isomap
plt.subplot(1, 2, 1)
plt.scatter(X[Y == 0, 0], X[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X[Y == 1, 0], X[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("Before Isomap")
plt.xlabel("Feature 1")
plt.ylabel("Feature 2")
plt.legend()

# After Isomap
plt.subplot(1, 2, 2)
plt.scatter(X_isomap[Y == 0, 0], X_isomap[Y == 0, 1], color='r', label=r'$\omega_1$')
plt.scatter(X_isomap[Y == 1, 0], X_isomap[Y == 1, 1], color='b', label=r'$\omega_2$')
plt.title("After Isomap")
plt.xlabel("Component 1")
plt.ylabel("Component 2")
plt.legend()

plt.tight_layout()
plt.show()
```

Miniproject: Metric Learning by Contrastive loss#

Metric Learning aims to map objects into an embedded space where distances reflect their similarities, with contrastive loss specifically ensuring that similar objects are close and dissimilar ones are farther apart by maintaining a margin. Additionally, triplet loss ensures that an anchor sample is closer to positive samples than to negative ones.
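The two losses described above can be written in a few lines. The sketch below uses one common variant of each (squared distances in the contrastive loss, plain distances in the triplet loss, margin of 1); other formulations differ in constant factors or in where the square is applied. The embeddings are made-up values for illustration.

```python
import numpy as np

def contrastive_loss(z1, z2, same, margin=1.0):
    """Per-pair loss: d^2 for similar pairs, max(0, margin - d)^2 for dissimilar pairs."""
    d = np.linalg.norm(z1 - z2, axis=-1)
    return np.where(same, d ** 2, np.maximum(0.0, margin - d) ** 2)

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Per-triplet loss: max(0, d(anchor, positive) - d(anchor, negative) + margin)."""
    d_ap = np.linalg.norm(anchor - positive, axis=-1)
    d_an = np.linalg.norm(anchor - negative, axis=-1)
    return np.maximum(0.0, d_ap - d_an + margin)

# Two pairs of embeddings: the first is labeled similar, the second dissimilar.
z1 = np.array([[0.0, 0.0], [0.0, 0.0]])
z2 = np.array([[3.0, 4.0], [0.6, 0.0]])
same = np.array([True, False])
pair_losses = contrastive_loss(z1, z2, same)

# Two triplets sharing the same anchor and positive, with a far and a near negative.
anchor = np.array([[0.0, 0.0], [0.0, 0.0]])
positive = np.array([[1.0, 0.0], [1.0, 0.0]])
negative = np.array([[3.0, 0.0], [1.5, 0.0]])
triplet_losses = triplet_loss(anchor, positive, negative)
print(pair_losses, triplet_losses)
```

In a deep metric learning setup, the embeddings would be the outputs of a neural network and these losses would be averaged over a mini-batch.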

Some useful links

Digging Deeper into Metric Learning with Loss Functions

Center Contrastive Loss for Metric Learning

Useful References

Metric Learning. Aurélien Bellet (Télécom ParisTech), Amaury Habrard (Université de Saint-Étienne), Marc Sebban (Université de Saint-Étienne).

Metric Learning: A Survey. Brian Kulis.